Products

Our Products

We design, develop, and manufacture our products in Silicon Valley, USA.

Niagara Networks™ delivers all the essential building blocks for high-performance visibility across physical and virtual network infrastructures. Our comprehensive portfolio includes Network Packet Brokers, Bypass Switches, Network TAPs, and a unified orchestration layer for seamless visibility and control.

Network Visibility Platforms – MADE in the USA

- Cloud Visibility
- Data Center Visibility
- Traffic Intelligence
- High-Availability & TAPs
- Visibility Orchestration
- Open Visibility

Solutions

Our Solutions

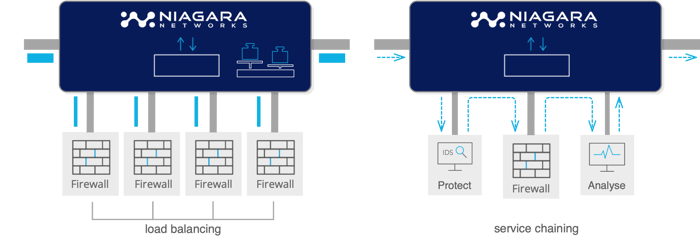

Niagara Networks™ solutions enable NetOps and SecOps teams to operate and administer multiple security platforms easily and efficiently, and to scale services, while reducing operational expenses and downtime.

Network Visibility Solutions for Virtual and Physical Infrastructure

- What is Network Visibility
- Network Issues that we Solve
- SSL/TLS Decryption
- Network Tapping
- Inline Bypass & High-Availability Failover
- Mobile Subscriber-Aware Visibility
- Solution for Hybrid Cloud Observability

Partners

Our Partners

Niagara Networks™ partners with world-class technology leaders to provide high-performance network visibility and security.

Our partners include companies in our technology alliance and companies that distribute Niagara's solutions.

Our Alliance of Technology Innovators & Channels

- Technology Alliance: Empowering innovation through strategic alliances with leading technology providers
- Channel Partners: Expanding reach and value through a strong worldwide network of channel partners
